occasional essays on working with words and pictures

—writing, editing, typographic design, web design, and publishing—

from the perspective of a guy who has been putting squiggly marks on paper for over five decades and on the computer monitor for over two decades

Monday, March 31, 2008

Chimera spotted in the wild. Or spotted chimera in the wild. Whatever.

Thanks to Karen Lew for the heads up on this headline [ti]gaffe...

![[ti]gaffe in the news](https://dmargulis.com/wp-content/uploads/2022/02/tigaffe.jpg)

Sunday, March 23, 2008

dot dot dot

JPEG, TIFF, GIF, PNG. Hi-res. Lo-res. PPI. DPI. LPI. Who cares? All you want is to have pictures in your book.

Okay, I’m overdue for a blog post, and this is a topic I’ve wanted to tackle. But it’s a bit daunting, and it will require a clear head. So go have a cup of coffee and come back. It’s okay. I’ll wait.

1. Rights

“I found it on the Web” is not sufficient justification for believing you have the right to use an image; neither is “I scanned it from a book” nor “I paid the photographer for it.” There are exceptions, such as images that their creators have voluntarily placed into the public domain (see Wikimedia Commons, for example) or that have aged into the public domain under copyright law. Otherwise, someone owns the rights to the image, and if that someone isn’t you, then you need to secure the right to use the image in the way you intend to use it. This may entail paying a license fee, purchasing exclusive rights, or making other arrangements. I’m not a lawyer, and I’m not going to advise you on the details. But if you send me images to put in your book or on your website, I want an explanation from you of why you have the right to use those images. “I created the picture myself” is the easiest explanation.

A further explanation of why “I paid the photographer for it” is insufficient: Studio photographers own the images they take. They sell you prints of those images, but you do not have the right to make copies of those prints unless you have a written agreement that allows you to.

You can hire a professional photographer on a work-for-hire basis (in most states). Or you can pay a one-time fee (to Olan Mills, for example) to obtain reproduction rights. So sometimes paying the photographer is sufficient. But it depends on what, exactly, you paid for.

2. Pixels

The world as we experience it does not consist of pixels. When the artist brushes paint onto a canvas, the paint does not arrange itself into a rectangular array of dots. If you were to take a photograph on film, the image would not consist of a rectangular array of discrete dots. However, using modern technologies, we need dots to reproduce an image either on a monitor or on paper.

Whether you take a photo with a digital camera or scan a photographic print with a scanner, you end up with pixels. A pixel is a rectangle of a specific color that is the mathematical average of the colors found within that particular rectangular region of the original scene or photograph. Obviously, the size of the rectangle is important, because each rectangle is a solid color. An array of four pixels by four pixels might give you enough information to know that it represented a person’s face, but it would not give you enough information to identify the person with any degree of certainty. From the point of view of wanting to represent the real world, therefore, more pixels are better than fewer pixels.
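To make the averaging concrete, here is a minimal Python sketch that treats a grayscale image as a grid of brightness values (0 = black, 255 = white) and builds each coarser pixel by averaging the finer values inside its rectangle. The function name `block_average` and the sample scene are hypothetical. A camera sensor does this averaging optically and electronically, not with code like this; the sketch only models the arithmetic.

```python
def block_average(image, block):
    """Downsample a grayscale image by averaging each block x block region.

    image: list of rows, each a list of numbers (0 = black, 255 = white).
    Assumes the image dimensions are exact multiples of `block`.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(0, rows, block):
        out_row = []
        for c in range(0, cols, block):
            # Gather every value inside this rectangle and average them.
            region = [image[y][x]
                      for y in range(r, r + block)
                      for x in range(c, c + block)]
            out_row.append(sum(region) / len(region))
        out.append(out_row)
    return out


# A hypothetical 4 x 4 "scene": a white square on a black background.
scene = [
    [0,   0,   0,   0],
    [0, 255, 255,   0],
    [0, 255, 255,   0],
    [0,   0,   0,   0],
]

print(block_average(scene, 2))  # [[63.75, 63.75], [63.75, 63.75]]
print(block_average(scene, 4))  # [[63.75]]
```

At 2 × 2, every pixel comes out the same gray, so the white square can no longer be identified at all: too few pixels, too little information.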

However, a pixel is also a physical region on the imaging surface of a digital camera and on your computer monitor, and there are physical and engineering limits to how small that region can be. So there is a practical limit to the number of pixels in an image. A large scanner can generate a file containing a much larger number of pixels than your hand-held camera has room for or than you can display at one time on a monitor.

In any case, image resolution is the dimensions of an image in pixels. If the image is 1200 pixels high by 1600 pixels wide, then its resolution is 1200 × 1600. Notice that there are no physical units, such as inches, associated with this expression.

3. Pixels per inch

Suppose your computer monitor has 100 pixels per inch (ppi) in each direction. A 1200 × 1600 image, viewed at its natural size (100%), fills an area 12 inches high by 16 inches wide (which may be larger than your monitor, of course). You can view the same image at a different scale, but when you do so, the software you are using resamples the image: it combines existing pixels if you reduce the scale, and it interpolates new pixels if you increase the scale. Interpolation is the process of creating new pixels between existing pixels and giving each new one a color calculated from the neighboring pixels. This results in a loss of sharpness, of course.
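Enlarging an image means inventing pixels that the camera or scanner never recorded. A minimal Python sketch of one common approach, linear interpolation along a single row of grayscale pixels, shows why that softens edges. The function `upsample_row` and the sample edge are hypothetical, and image editors typically offer several other methods (bicubic, for example).

```python
def upsample_row(row, factor):
    """Enlarge one row of grayscale pixels by an integer factor using
    linear interpolation: each new pixel gets a weighted average of the
    two original pixels it falls between."""
    n = len(row)
    out_len = (n - 1) * factor + 1   # keep the first and last pixels fixed
    out = []
    for i in range(out_len):
        pos = i / factor             # position measured in original pixels
        left = int(pos)
        right = min(left + 1, n - 1)
        t = pos - left               # 0.0 at the left pixel, nearer 1.0 toward the right
        out.append(row[left] * (1 - t) + row[right] * t)
    return out


edge = [0, 0, 255, 255]              # a hard black-to-white edge
print(upsample_row(edge, 2))         # [0.0, 0.0, 0.0, 127.5, 255.0, 255.0, 255.0]
```

The enlarged row has a mid-gray pixel (127.5) where the original jumped straight from black to white; multiplied across a whole photograph, that is the loss of sharpness.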

To print an image in a book, a good practice is to provide 300 pixels per inch. So a 1200 × 1600 image, printed at its natural size, will cover four inches by five and one-third inches on the printed page. It can be reduced to cover less area without damage, but enlarging it to cover more area requires interpolation to increase the number of pixels (the image resolution), and that results in a loss of sharpness.
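The size arithmetic here is plain division and multiplication. A minimal Python sketch, with hypothetical function names, converts pixel dimensions to printed inches at a given ppi and works backward from a target print size to the pixels you need. Note that it takes width before height, the reverse of the 1200 × 1600 (height × width) order used in the text.

```python
def print_size_inches(width_px, height_px, ppi=300):
    """Return the (width, height) in inches an image covers when printed
    at `ppi` pixels per inch without resampling."""
    return width_px / ppi, height_px / ppi


def pixels_needed(width_in, height_in, ppi=300):
    """Return the pixel dimensions needed to print at a given size
    without enlarging (interpolating) the image."""
    return round(width_in * ppi), round(height_in * ppi)


w, h = print_size_inches(1600, 1200)
print(f"{w:.2f} x {h:.2f} inches")             # 5.33 x 4.00 inches
print(pixels_needed(6, 9))                     # (1800, 2700) for a hypothetical 6 x 9 inch area
print(print_size_inches(1600, 1200, ppi=100))  # (16.0, 12.0) on a 100 ppi monitor
```

The same `pixels_needed` call with `ppi=1200` shows why scanned line art is so much more demanding: a 4 × 3 inch drawing needs 4800 × 3600 pixels, sixteen times as many as a photograph of the same size.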

By the way, 300 pixels per inch works well for photographs. If you were to scan a line drawing, though, 1200 pixels per inch would be needed to ensure smooth lines with clean edges. Line art works better if it is created from scratch in a vector drawing program, which eliminates any concerns about resolution.

If you want a larger image on the printed page, you have to start with more pixels, either by using a higher-resolution camera or scanning a larger print.

4. What color is your pixel?

On a monitor, a single pixel is composed of the three transmissive primary colors (red, green, and blue), each of which can be adjusted to any of (typically) 256 levels, giving you 256 × 256 × 256 = 16,777,216 possible colors. But printing doesn’t work that way. In printing, what varies is the size of the colored (or black) dot; and instead of the additive (transmissive) primaries, the colors are composed of the subtractive (reflective) primaries: cyan, magenta, and yellow. To represent a small area of some color on the printed page, we use variously sized overlapping ovals of those three colors and black (abbreviated K, for the “key” plate, which also avoids confusion with blue). So we talk about RGB images for the computer monitor and CMYK images for the printed page.

A monitor—even a well-calibrated high-end monitor—can only provide an approximation of the color that will print on paper. The reason is basic physics. Ink on paper gets the image to your eyes by reflection. The monitor gets the image to your eyes by transmission. Reflection is a subtractive process (all the wavelengths of incoming white light are absorbed by the ink pigments except the wavelengths for cyan, magenta, and yellow, which are reflected back to you; the white paper not covered by those three pigments reflects back whatever white—mixed wavelength—light not absorbed by the black ink). Transmission is an additive process (red, green, and blue wavelengths are added to create the impression of the various colors, including white). The full range of colors available in each system, called the gamut, is different for the CMYK subtractive system than for the RGB additive system. So there are colors that can be represented on the monitor that you will not see on paper.
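The relationship between the two systems can be sketched with the simple textbook formula below, in which each subtractive ink is the complement of one additive primary. This is an illustration only, under a loud assumption: real prepress conversion uses ICC color profiles tuned to a specific press, ink, and paper, and this formula ignores gamut entirely. It will produce CMYK numbers for any RGB color, whether or not a press can actually print that color. The function name is hypothetical.

```python
def rgb_to_cmyk_naive(r, g, b):
    """Textbook RGB -> CMYK conversion with no color management.

    r, g, b are 0-255. Returns c, m, y, k as fractions 0.0-1.0.
    Each subtractive ink is the complement of an additive primary:
    cyan absorbs red, magenta absorbs green, yellow absorbs blue.
    """
    rf, gf, bf = r / 255, g / 255, b / 255
    k = 1 - max(rf, gf, bf)          # black takes over the darkness all three share
    if k == 1:                        # pure black: no colored ink needed
        return 0.0, 0.0, 0.0, 1.0
    c = (1 - rf - k) / (1 - k)
    m = (1 - gf - k) / (1 - k)
    y = (1 - bf - k) / (1 - k)
    return c, m, y, k


levels = 256
print(levels ** 3)                    # 16777216 possible RGB colors
print(rgb_to_cmyk_naive(255, 0, 0))   # (0.0, 1.0, 1.0, 0.0): red is magenta + yellow
```
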

5. Dots per inch

An output device like a desktop laser printer or (more important for this discussion) a filmsetter or a computer-to-plate (CTP) system is unlike a monitor. It can only paint a fixed dot black or white. There are no shades of gray. There are no colors. (That’s why four-color printing requires four printing plates, one black and white plate for each of the CMYK inks. It’s the ink that provides the color, not the plate.)

So how do we get from your pixel, with one of millions of colors, to a dot on an output device that can only be black or white? Well, suppose we have a 2400 dot per inch (dpi) output device (for simplicity—higher numbers are common). Each pixel of your original 300 ppi image is going to be represented by a square array of 64 dots (8 × 8) for each of the four printing inks. For a monochrome (black & white) image, this means there are 64 possible levels of gray for that 8 × 8 region. And the same would be true for each of the four inks for a color image. That gives us enough precision to represent the color of the pixel and to keep the image as sharp-looking as it was before.
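Under the simplified model in this section (a 2400 dpi device, a 300 ppi image, and one 8 × 8 cell of device dots per pixel for each ink), the bookkeeping can be sketched in Python. The two constants come from the text; the function names are hypothetical, and the row-by-row fill exists only to make the count visible.

```python
DEVICE_DPI = 2400   # output device resolution from the example above
IMAGE_PPI = 300     # image resolution from the example above

cell = DEVICE_DPI // IMAGE_PPI        # 8 device dots per pixel, each direction
dots_per_cell = cell * cell           # 64 dots in the cell


def dots_to_turn_on(ink_coverage):
    """Number of device dots to expose in one pixel's 8 x 8 cell for a
    given ink coverage (0.0 = no ink, 1.0 = solid ink)."""
    if not 0.0 <= ink_coverage <= 1.0:
        raise ValueError("coverage must be between 0.0 and 1.0")
    return round(ink_coverage * dots_per_cell)


def render_cell(ink_coverage):
    """Draw the cell as text, filling dots row by row ('#' = ink).
    Real screening clusters the dots into a round or oval shape."""
    on = dots_to_turn_on(ink_coverage)
    dots = ["#" if i < on else "." for i in range(dots_per_cell)]
    return ["".join(dots[r * cell:(r + 1) * cell]) for r in range(cell)]


print(cell, dots_per_cell)            # 8 64
print(dots_to_turn_on(0.25))          # 16
print("\n".join(render_cell(0.25)))
```

Each device dot is strictly on or off; the tone comes entirely from how many of the cell's dots are on, anywhere from none to all 64.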

6. Lines per inch

As I said above, an image is printed on paper using oval dots, in one color or four colors. The spacing of these dots is called the line screen. A typical monochrome image in a newspaper is printed at 85 lines per inch (lpi), and you can easily see the individual dots with the naked eye. Most book printing is done at 133 lpi, with better color printing done at 150 lpi or higher.

The name line screen comes from the way halftone images (those arrays of varying-size ovals) were created photographically. A piece of film with ruled lines, placed between the image being photographed and a piece of unexposed lithographic film, created an interference pattern that resulted in the pattern of dots on the film when it was developed. The spacing of the ruled lines determined the spacing of the halftone dots.

It is not typical, at least with conventional printing methods, to put ink on paper at a halftone line screen of 300 lines per inch. So the imagesetter emulates an old halftone screen by averaging some number of your original pixels together. For example, to print at 150 lines per inch, a 16 × 16 dot region (four of your original 300 ppi pixels) would be averaged to create one halftone dot. The size of the dot, drawn as an oval on that 16 × 16 region for each of the four colors, would determine the apparent color of the printed image.
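The 150 lpi arithmetic can be sketched the same way. In this minimal Python example, the device, image, and screen resolutions come from the text; the function name and pixel values are hypothetical, and it assumes round dots for simplicity where the text describes ovals. It averages the pixels under one halftone cell and sizes a dot whose area matches that average coverage.

```python
import math

DEVICE_DPI = 2400
IMAGE_PPI = 300
SCREEN_LPI = 150

cell_dots = DEVICE_DPI // SCREEN_LPI      # 16 device dots across one halftone cell
pixels_across = IMAGE_PPI // SCREEN_LPI   # 2 image pixels across one cell
pixels_per_cell = pixels_across ** 2      # 4 pixels averaged into each halftone dot


def halftone_dot_radius(pixel_coverages):
    """Average the ink coverages (0.0-1.0) of the pixels that fall in one
    halftone cell, then return the radius, in device dots, of a round dot
    whose area covers that fraction of the 16 x 16 cell.

    At high coverage the dot grows past the cell edges and merges with
    its neighbors, as real halftone dots do."""
    coverage = sum(pixel_coverages) / len(pixel_coverages)
    dot_area = coverage * cell_dots * cell_dots
    return math.sqrt(dot_area / math.pi)


# Hypothetical ink coverages for the 2 x 2 block of pixels under one cell
r = halftone_dot_radius([0.2, 0.4, 0.3, 0.5])
print(cell_dots, pixels_per_cell)   # 16 4
print(round(r, 2))
```
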

In this example, we’ve taken a fixed-resolution image (1200 × 1600), rendered it at 300 ppi, sent it to a 2400 dpi output device, and converted it to a 150 lpi printing plate.
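Following that one image through every stage gives the numbers below. This is a minimal Python sketch using the values from the text; the function name `pipeline` is hypothetical, and it lists height first to match the text's 1200 × 1600 order.

```python
def pipeline(height_px, width_px, ppi=300, dpi=2400, lpi=150):
    """Follow one image through the stages described above and report
    its size at each stage (height first, matching the text)."""
    height_in, width_in = height_px / ppi, width_px / ppi
    return {
        "image pixels": (height_px, width_px),
        "print size (in)": (height_in, width_in),
        "device dots": (round(height_in * dpi), round(width_in * dpi)),
        "halftone cells": (round(height_in * lpi), round(width_in * lpi)),
    }


for stage, size in pipeline(1200, 1600).items():
    print(f"{stage}: {size}")
```

The plate ends up with 9600 × 12800 device dots grouped into 600 × 800 halftone cells, each cell built from a 2 × 2 block of the original pixels.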

7. A word on image file formats

The most common file format for photographic images is JPEG. If you take a digital photo with your camera or purchase a royalty-free image from a website, you will most likely be starting with a JPEG image.

What you need to understand about JPEG is that it is a lossy format. This means that every time you resize it, crop it, adjust colors, or make any other changes and then save it, the compression algorithm is run again, discarding more fine detail and further reducing sharpness. When you save a JPEG, most image-processing software lets you specify a quality level. At the highest level, you may not see any loss of sharpness, although some information is still discarded. However, the safest way to handle a JPEG is to convert it at once to a lossless format.

The standard lossless format for printing on paper is TIFF. You may be using some intermediate lossless format, such as Photoshop PSD, while you are working on the image, but when you are done, save it as a TIFF. You can always save a copy of the TIFF as a JPEG if you need a web image. But you cannot go the other direction: converting a JPEG to a TIFF preserves whatever the JPEG still contains, but it cannot restore the detail that JPEG compression has already discarded. That’s what lossy means.
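The difference between lossy and lossless can be shown with a toy example in Python. Here `lossy_save` is a hypothetical stand-in that simply rounds values to coarse steps (real JPEG quantizes frequency coefficients, not raw pixel values), while `lossless_roundtrip` uses the standard library's `zlib`, whose Deflate method is one of the lossless compression options TIFF supports. The gradient values are made up.

```python
import zlib


def lossy_save(values, step=16):
    """Toy stand-in for lossy compression: round every value to the
    nearest multiple of `step`, throwing away the fine detail."""
    return [min(255, round(v / step) * step) for v in values]


def lossless_roundtrip(values):
    """Compress and decompress with zlib (lossless): the output is
    identical to the input, byte for byte."""
    packed = zlib.compress(bytes(values))
    return list(zlib.decompress(packed))


original = [100, 103, 107, 110, 114, 118]   # a hypothetical smooth gradient
jpeg_like = lossy_save(original)
tiff_like = lossless_roundtrip(jpeg_like)

print(jpeg_like)               # [96, 96, 112, 112, 112, 112]: the gradient became steps
print(tiff_like == jpeg_like)  # True: the lossless copy keeps what it was given
print(tiff_like == original)   # False: it cannot bring back what was discarded
```
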

You may also encounter GIF and PNG images. They are typically not used for printing and should be converted to TIFFs as well.

Questions?

All of the above is second nature to people who work in the graphic arts, but it is generally confounding for authors. I’ve simplified somewhat (intentionally) in trying to lay it out as clearly as I can, but there are sure to be questions. Please feel free to post questions to the comment stream or to email me directly.

Friday, March 14, 2008

Corporate communications notes from all over: the corner of Telephone and Telephone

Several days ago I got a phone call purporting to be from someone at my ophthalmologist’s office, asking whether I would be interested in participating in a study of a new drug for the treatment of dry eye, a condition noted on my chart, apparently. Show up for four appointments; use the eyedrops they’ll provide four times a day for a month. Collect $500.

Cool. Why not? Maybe the drops will help. Easy money.

So I signed up, with my first appointment scheduled for 8:30 this morning. They called Wednesday to remind me. Yes, I’ll be there. Oh, did we mention the referral fee if you know anyone else who might be a candidate? Why no, you didn’t. I mentioned the study to a friend who I’ve seen putting drops in his eyes frequently (with no claim that I know what condition he’s using the drops for). Yes, he’d be interested, too. Great. He’ll get $500 and I’ll get $100 for referring him. Excellent plan.

So I showed up this morning, fifteen minutes early in case there was paperwork, with my list of meds, as requested, and I walked up to the desk. What study? Which doctor called? Are you sure it was for today? Just take a seat.

Which I did. Eye doctor’s waiting room with no magazines. Go figure. And I hadn’t brought any reading matter of my own, foolishly. Tick tock. Eavesdrop on other people’s uninteresting chitchat. Twiddle thumbs. At 8:50 I went back to the desk and asked if we were waiting for someone to arrive. The office manager then picked up the phone and called the practice’s other office, forty-five minutes away, in a different city. Yep. That’s where the study is being conducted. No, the person who called me—in New Haven—did not tell me that the study was not being conducted in New Haven. No, I was not aware that the practice had another office. No, I will not be participating. Yes, I will be getting a check for my inconvenience, which is not a bad way of apologizing for a screw-up.

But here’s the lesson

Does your business card include the area code with your phone number? Does your website? Is there some indication, on every piece of business collateral you produce, of where you are located? When a customer calls the number listed in the local phone book for your business, do they reach someone at the local office or are they speaking with someone at a call center who has no clue what the local weather and traffic conditions are at the moment or whether you’re located across the street from Pizza Hut?

It’s a little thing. Tell people where you are calling from and where you are asking them to go.

Postscript to readers under forty

Before the age of cell phones, city streets had telephone booths containing public pay telephones (you put in one or more coins to be connected to the number you dialed—on a dial, not a keypad, before the 1960s). The standard design for many years was a square booth (executed in different materials in different decades) at the top of which was an illuminated panel with the word “Telephone” on each of the four sides. An old joke has a man stumbling, inebriated, out of a bar and calling home for a ride. When asked for his location, he sticks his head out of the phone booth, glances up, and responds, “I’m at the corner of Telephone and Telephone.”

Tuesday, March 04, 2008

Much silliness about grammar

If you did not know that today is National Grammar Day, good for you. It is an occasion to celebrate, though, the surprising acknowledgment that linguists do not, in fact, hate editors.

Saturday, March 01, 2008

Mix and match typefaces

I’ve had a couple of reminders lately of ways the history of type informs design.

The grouping of typefaces into families is a relatively modern notion. When Aldus Manutius introduced the first italic type, in 1501, his purpose was merely to get more words on a page so he could sell his books more cheaply than his competitors could. Entire books were set in his italic.

Nowadays, we think of italics as a way to emphasize a word or set off a book title, for example. And we expect the italic face to complement the roman face it is associated with. We think of a typeface family, as a rule, the way we see it used in a word processor: You got yer roman (“normal”), yer italic, yer bold, and yer bold italic. That’s the available spectrum and be darn glad we give you that many choices. If you want more, change the color or add an underscore. Now go away, kid, and don’t bother us.

The other day, on one of the online discussion groups devoted to digital fonts, a fellow pointed out that he has type families consisting of many more than four fonts. A given family might include fonts labeled light, thin, book, medium, semibold, bold, heavy, and black, with both roman and italic fonts in each weight. However, the word processor he was using, Microsoft Word, would not give him access to all those weights. It offered only normal, italic, bold, and bold italic, apparently making its own decisions as to which of the many available fonts was to be mapped to each of those labels. While there is a technical solution that involves opening the font files in a font editor and changing some internal names, that is beside the point. The real solution is to use word processors for word processing and use page layout software for typographic design. Page layout software is not stuck with the four-font paradigm but rather provides access to all the fonts in the typeface family.

The way we got into this fix with word processors gets back to the history of type. The notion of a coordinated set of fonts, with italic and bold faces used for emphasis within a roman text, goes back a couple hundred years. By the time mechanical typesetting was introduced, toward the end of the nineteenth century, this was a fixed idea. The Mergenthaler Linotype carried two letterforms on each matrix (typically roman and italic in the book industry and roman and bold in the newspaper industry), and the two, incidentally, had to have the same width, by definition.

The typewriter, introduced during the same period, was a way of getting letters onto paper without having to pay a compositor to set type by hand or, a bit later, by typesetting machine. But the typewriter was a one-trick pony in terms of font. If you wanted emphasis, your choices were underlining or all caps. The great advantage of the typewriter was cost and efficiency; it was not a typesetting device except in the most rudimentary sense.

But by the late 1960s, the IBM Selectric had popularized the notion (introduced earlier by the Varityper) of combining different fonts in a document.

The first word processors were modeled on the typewriter, but when desktop publishing came along (with digital fonts, laser printers, and a wysiwyg user interface), the word processors adapted—somewhat. And here we are today, with normal, italic, bold, and bold italic.

But what brought this topic up was an interesting project I’m working on.

The book is a joint travel diary. The editor of the diary wanted a way to distinguish the two writers typographically. After some experiments with other options, we settled on using roman type for one writer and italic for the other.

But there was a problem. The italic face that “belongs to” the roman we chose is fine for its intended use, but it’s a little too coordinated with the roman to give the idea that these are two people of equal stature. Assigning the roman to the husband and the italic to the wife seemed to subtly suggest a subordinate relationship. The editor and I both thought it important to give her her own distinct typeface.

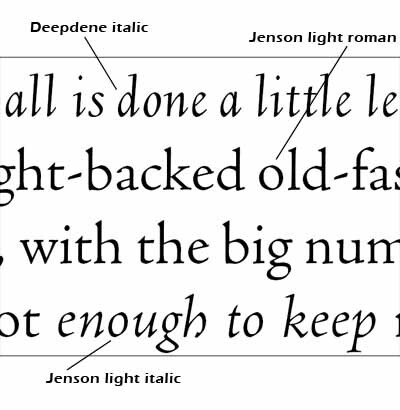

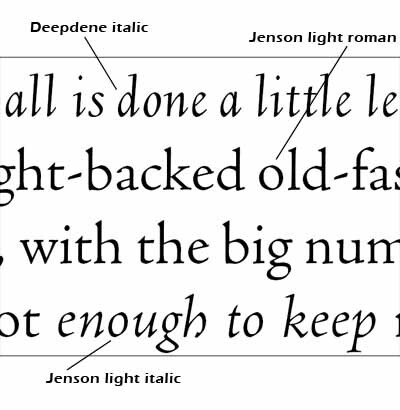

History to the rescue. The italic that now accompanies the roman in our chosen face, Adobe Jenson, began as an unrelated face in a different century: Jenson cut his roman in the fifteenth century, while the italic is based on the work of Ludovico degli Arrighi in the sixteenth. Knowing that, I chose a different italic, also based on Arrighi’s work, designed in the first half of the twentieth century by the famous American designer Frederic W. Goudy.

Goudy’s Deepdene italic, as you can see, is much narrower and more upright than the Jenson italic, but the letterforms are of the same general historic period, and they complement the Jenson roman every bit as well as the so-called Jenson italic does. Deepdene is also a bit lighter than the standard weight of the Jenson. Fortunately, the Jenson family includes a light weight that is a better match. The two typefaces also have slightly different x-heights, so the point size of the italic had to be cheated a bit, too; but that’s a minor fix.

With modern software, it is easy enough to set up character styles that handle the mixing and matching of fonts from different typeface families. The typographer has to take some care to set these styles up correctly, but after that, the work goes smoothly.

Details matter. History matters.